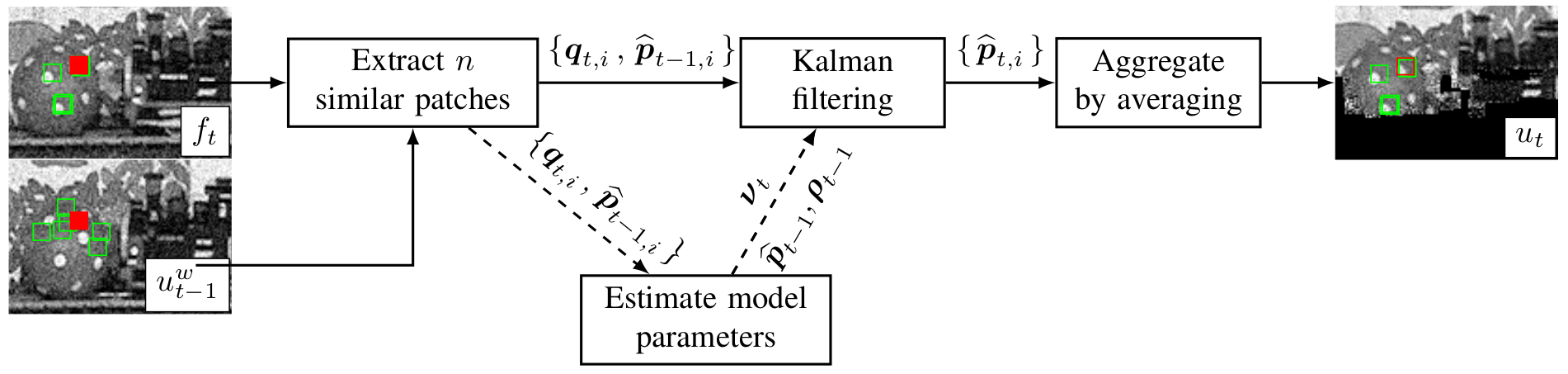

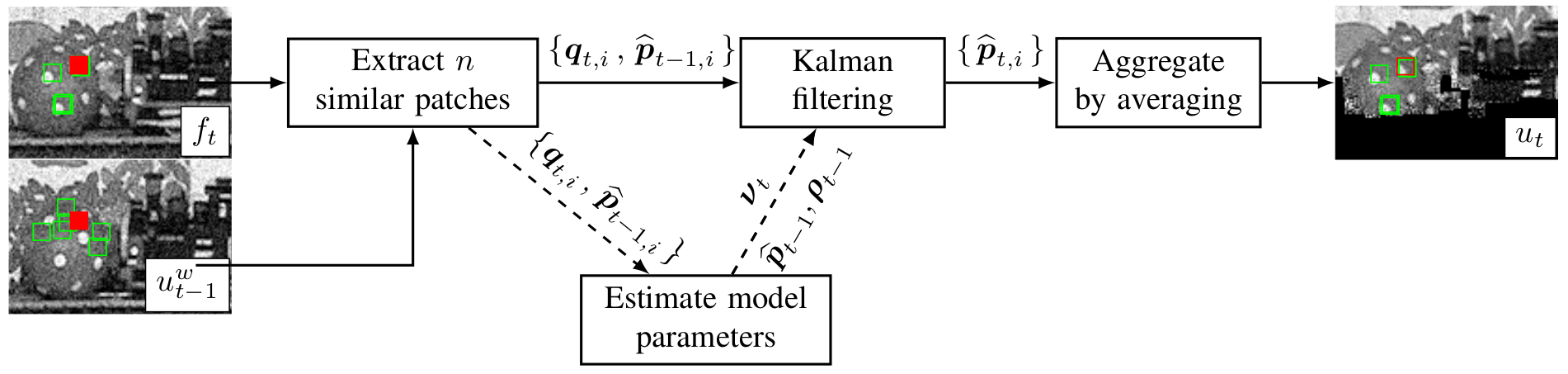

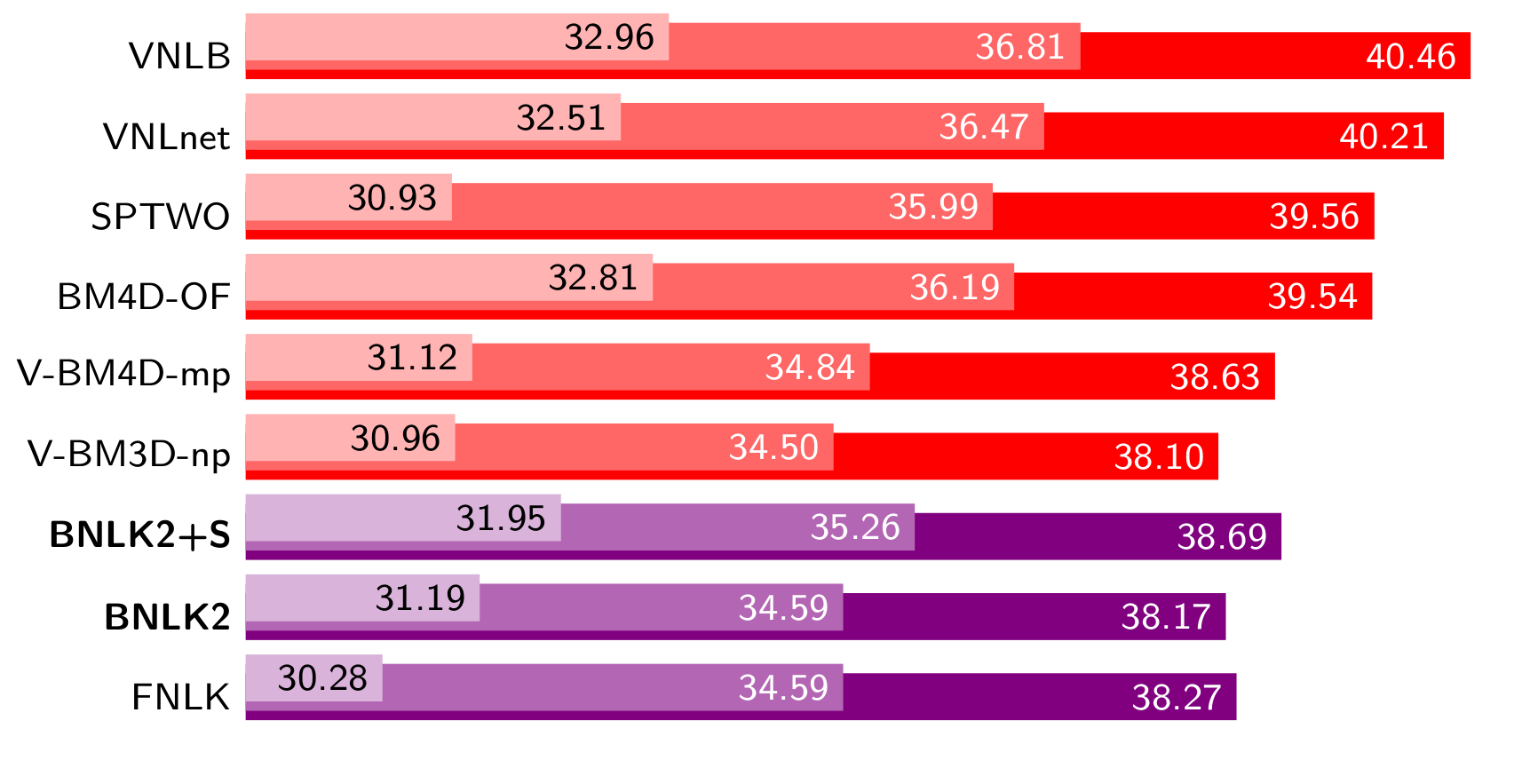

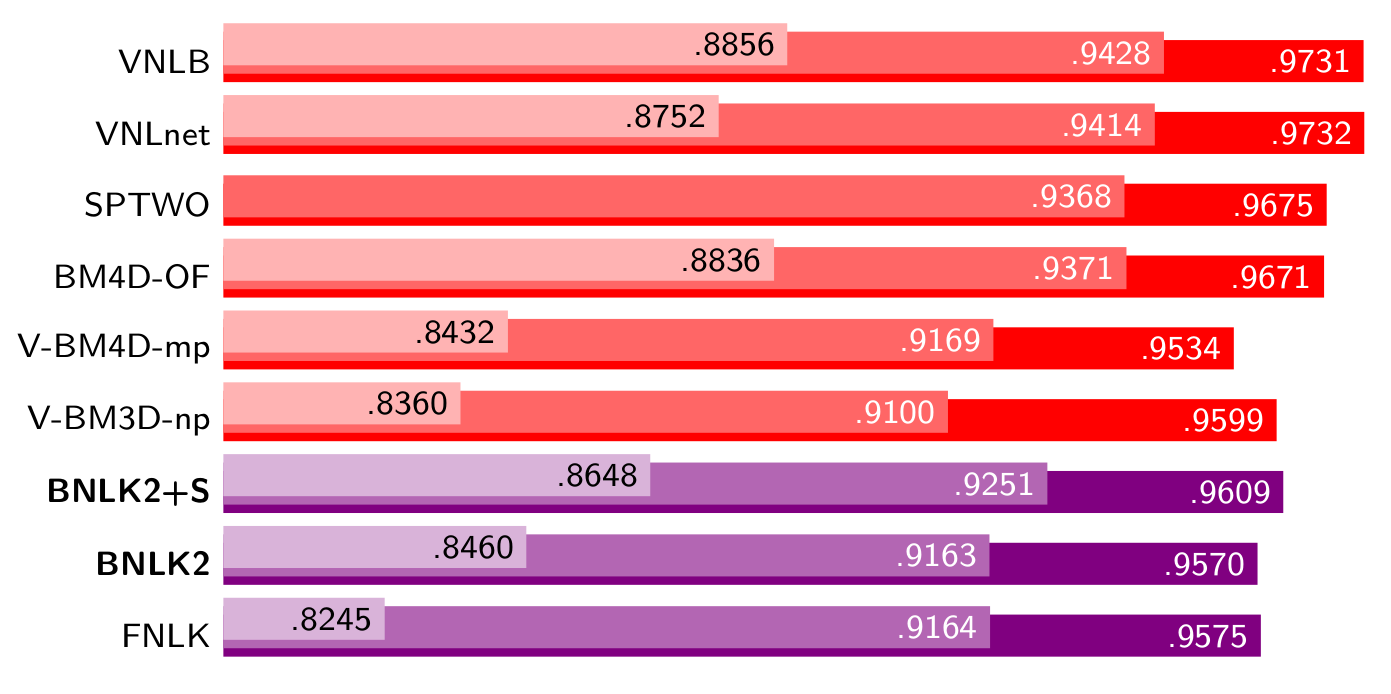

A frame-recursive video denoising method computes each output frame as a function of only the current noisy frame and the previous denoised output. Frame-recursive methods were among the earliest approaches to video denoising. However, over the last fifteen years they have been used almost exclusively for real-time applications, and their denoising performance has remained significantly below the state of the art. In this work we propose Backward Non-Local Kalman (BNLK), a simple frame-recursive method that is fast, has low memory complexity, and competes with more complex state-of-the-art methods that must process several input frames to produce each output frame. Furthermore, the proposed approach recovers many details that most non-recursive methods miss. As an additional contribution, we propose an off-line post-processing of the denoised video that improves both denoising quality and temporal consistency.
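To make the frame-recursive structure concrete, here is a minimal toy sketch, not BNLK: a scalar Kalman filter applied independently at each pixel. It assumes a static scene (no motion compensation) and a random-walk model with a hypothetical process variance `process_var`, whereas BNLK filters non-local patches along the motion. The only point it illustrates is that output frame t depends solely on noisy frame t and the denoised output t-1.

```python
import numpy as np

def recursive_denoise(noisy_frames, sigma, process_var):
    """Toy pixel-wise frame-recursive denoiser (one scalar Kalman filter per pixel).

    Assumptions (not BNLK): static scene, identity motion model, random-walk
    prior with variance `process_var` per frame, Gaussian noise of std `sigma`.
    """
    noise_var = sigma ** 2
    est = noisy_frames[0].astype(np.float64)  # initialise with the first noisy frame
    var = np.full(est.shape, noise_var)       # its error variance is the noise variance
    outputs = [est.copy()]
    for frame in noisy_frames[1:]:
        # Predict: carry over the previous denoised frame; its uncertainty grows.
        pred_var = var + process_var
        # Update: the Kalman gain blends the prediction with the new noisy frame.
        gain = pred_var / (pred_var + noise_var)
        est = est + gain * (frame - est)
        var = (1.0 - gain) * pred_var
        outputs.append(est.copy())
    return outputs
```

On a static scene the error variance shrinks frame after frame, because each output aggregates information from all past frames while only keeping one frame of state in memory.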

This webpage contains the video denoising results shown in the paper, as well as links to our code and other related work.

paper|code|web

P. Arias, J.-M. Morel, Kalman filtering of patches for frame-recursive video denoising. NTIRE CVPRW, 2019. (methods BNLK2 and BNLK2+S)

preprint|code

A. Davy, T. Ehret, J.-M. Morel, P. Arias, G. Facciolo, Non-local video denoising by CNN. ICIP, 2019. (VNLnet method)

preprint|code

T. Ehret, A. Davy, J.-M. Morel, G. Facciolo, P. Arias, Model-blind video denoising via frame-to-frame training. CVPR, 2019.

paper|code|web

T. Ehret, P. Arias, J.-M. Morel, NL-Kalman: A recursive video denoising algorithm. ICIP, 2018. (method FNLK)

paper|web

P. Arias, G. Facciolo, J.-M. Morel, A comparison of patch-based models in video denoising. IVMSP, 2018. (method BM4D-OF)

paper|code|web

P. Arias, J.-M. Morel, Video denoising via empirical Bayesian estimation of space-time patches. JMIV 60(1):70-93, 2017. (method VNLB)

paper

T. Ehret, P. Arias, J.-M. Morel, Global patch search boosts video denoising. VISIGRAPP, 2016.

We evaluated our method on seven 960x540 grayscale test sequences taken from Derf's video database. The originals are RGB sequences of resolution 1920x1080; we converted them to grayscale by averaging the channels and downscaled them by a factor of two.
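The preprocessing can be sketched as below. The channel averaging follows the description above; the downscaling filter is not specified on this page, so averaging non-overlapping 2x2 blocks is an assumption made only for illustration.

```python
import numpy as np

def to_gray_half_res(rgb):
    """Grayscale by averaging R, G, B, then downscale by 2 in each dimension.

    Assumption: downscaling by 2x2 block averaging (the actual filter used
    for the test sequences is not stated).
    """
    gray = rgb.astype(np.float64).mean(axis=2)   # average the three channels
    h, w = gray.shape
    gray = gray[: h - h % 2, : w - w % 2]        # crop to even dimensions
    # Group pixels into 2x2 blocks and average each block.
    return gray.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))
```

Applied to a 1920x1080 frame stored as an array of shape `(1080, 1920, 3)`, it returns an array of shape `(540, 960)`.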

We show results for two versions of our method: an on-line version denoted BNLK2 ("2" because we apply two iterations) and an off-line post-processing called BNLK2+S.

We compared them against the following algorithms: FNLK, VBM3D, VBM4D, SPTWO, VNLDCT, BM4D-OF, VNLB and VNLnet.

The following are links to the results obtained by the above methods for each sequence and noise level. We have split them by sequence and noise level, and into recursive and non-recursive methods.
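The results are grouped by noise level sigma (10, 20 and 40). A minimal sketch of how noisy inputs at a given sigma can be synthesised is shown below, assuming additive white Gaussian noise on the [0, 255] intensity scale with no clipping; these assumptions, and the helper name `add_awgn`, are illustrative and not taken from this page.

```python
import numpy as np

def add_awgn(frames, sigma, seed=0):
    """Add i.i.d. zero-mean Gaussian noise of standard deviation `sigma`.

    Assumptions: additive white Gaussian noise, intensities in [0, 255],
    no clipping; `seed` makes the noise realisation reproducible.
    """
    rng = np.random.default_rng(seed)
    return [f.astype(np.float64) + rng.normal(0.0, sigma, f.shape) for f in frames]
```

Fixing the seed gives every method the same noisy realisation, so differences in output come from the denoisers rather than from the noise draw.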

Noise-free sequences:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

Recursive methods (FNLK, BNLK2, BNLK2+S):

sigma 10:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

sigma 20:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

sigma 40:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

Non-recursive methods (VBM3D, VBM4D, SPTWO, VNLDCT, BM4D-OF, VNLB):

sigma 10:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

sigma 20:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

sigma 40:

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

VNLnet (sigma 10, 20 and 40):

crowd

park joy

pedestrians

station

sunflower

touchdown

tractor

Work partly financed by IDEX Paris-Saclay IDI 2016, ANR-11-IDEX-0003-02, Office of Naval Research grant N00014-17-1-2552, DGA Astrid project «filmer la Terre» no ANR-17-ASTR-0013-01, MENRT.